Artificial Intelligence: two words that have been shaking up investing circles, startup founders, and businesses across sizes and sectors over the last couple of years. Business owners are racing to integrate AI into their operations to retain their competitive edge and stay relevant in a fast-paced, rapidly evolving technology landscape. Startup founders are reimagining their business models, and investors are reshaping their portfolios, seeking to capitalize on what many believe is the most transformative technological shift since the rise of the internet and the mobile phone.

Over the past five decades, waves of excitement about Artificial Intelligence have been followed by periods of skepticism. But this time feels different. The breakthroughs in AI aren’t just theoretical—they’re tangible, commercial, and accelerating at an unprecedented pace. From generative AI to autonomous systems, the momentum is undeniable, and the implications are profound.

As technology investors, we’ve been closely observing this evolution, cutting through the noise to identify real opportunities amid the frenzy. This thesis is our attempt to demystify parts of the AI landscape, separating hype from substance, and to share where we see the most promising investment opportunities in the near future.

Artificial intelligence has evolved dramatically, progressing from simple rule-based systems in the 1950s to today's sophisticated foundation models. Early AI relied on decision trees and explicit programming, which offered limited adaptability. The 1990s brought statistical methods that provided more flexibility. However, the true AI revolution began with the rise of deep learning—particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs)—which enabled breakthroughs in computer vision and speech recognition. Alongside this, Reinforcement Learning (RL) emerged as a powerful paradigm, driving advancements in decision-making systems, robotics, and game-playing AI.

The next quantum leap came with Generative Adversarial Networks (GANs), which gave AI the ability to create, most notably by generating realistic images and video, adding an entirely new dimension to AI capabilities. While this advancement was remarkable, the landscape shifted dramatically in 2017 with the introduction of the transformer architecture, which underpins models like BERT and GPT (both released in 2018) and revolutionized natural language processing (NLP). Transformers unlocked unprecedented generalization abilities and made AI far more adaptable across diverse tasks.

The current frontier in AI, beyond improving existing models and building industry-specific use cases, is the pursuit of Artificial General Intelligence (AGI), a complex and evolving concept in which machines would be able to reason, learn, and adapt across diverse domains as humans do. If fully realized, AGI would fundamentally change how AI interacts with humans and performs tasks across industries, creating a profound and lasting transformation in both technology and society.

The global AI market has experienced exponential growth over the past decade, with current valuations reaching hundreds of billions of dollars. The AI economy holds immense potential and is expected to grow to around a $2 trillion market by 2030, driven by a robust compound annual growth rate (CAGR).[1]

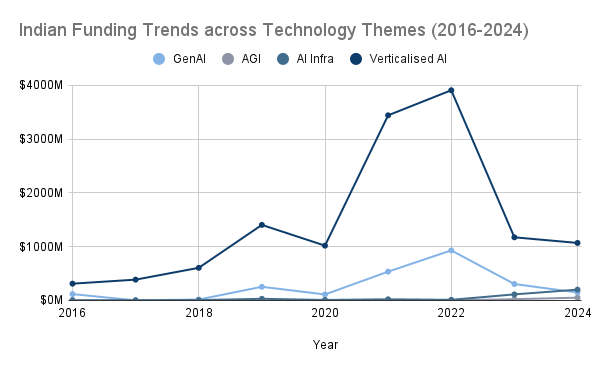

Funding Trends across Technology Themes**

Market Landscape: Technology Themes

Generative AI (GenAI) refers to a new class of AI models capable of creating original content—text, images, code, audio—by learning patterns from vast datasets. Unlike traditional AI, which is often task-specific and rule-driven, GenAI models like GPT, Gemini, and Claude are flexible, general-purpose engines that can reason, summarize, compose, and converse through natural language interfaces.

So, why is GenAI at the centre of today’s technology conversation?

Simply put, the barrier to building with AI has dropped dramatically. Foundational models are now available via APIs, eliminating the need for massive in-house AI teams. This has enabled a surge of experimentation and real-world deployment. From coding copilots and AI sales agents to clinical documentation tools, GenAI is reshaping software and workflow design across industries.

Yet despite the momentum, commercialisation is still early. Many enterprise use cases remain in pilot mode, and challenges around data quality, cost-efficiency, compliance, and trust still limit broad-scale adoption.

The sections that follow unpack where GenAI is gaining real traction—and where we believe the most compelling opportunities exist across models, applications, and infrastructure.

Artificial intelligence has evolved from being a niche technology to becoming a foundational component of infrastructure across industries. While foundational models were once confined to research labs, they are now widely accessible via APIs, enabling diverse AI applications. This has drastically reduced the complexity and cost of AI implementation, enabling organizations to leverage capabilities that once required substantial in-house expertise. Technologies like containerization, specialized hardware, and MLOps have streamlined AI deployment, making it more scalable and seamlessly integrated into business operations.

This transformation has led to three distinct business models in AI:

AI-native companies are built ground-up around AI as their core product and differentiator. These companies leverage foundational models and GenAI to unlock entirely new workflows, products, or user experiences. They typically operate with lean, technical teams and ship fast, innovation-first offerings.

Examples include Anthropic, Character.AI, and our portfolio company Ayna, which enables fashion brands to generate professional catalog imagery using AI.

AI-enabled enterprises, on the other hand, embed AI into existing business models to enhance automation, decision-making, and efficiency.

In our portfolio, companies like Awiros (computer vision), A5G Networks (network intelligence), CloudSEK (cyber threat intelligence), and SecureThings (automotive cybersecurity) exemplify this model—leveraging AI to solve domain-specific challenges at scale.

Infrastructure enablers are companies that provide the tools and infrastructure to accelerate AI adoption, offering APIs, MLOps platforms, model hosting, data labeling, or custom chips. They lower the barrier to entry for others to build, scale, and maintain AI solutions. Examples include Hugging Face (model APIs), Nvidia (GPUs), Graphcore, and Cerebras.

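While the terms are often used interchangeably, foundational models and LLMs aren’t the same: foundational models are large models pre-trained on broad data that can be adapted to many tasks and modalities (text, images, audio, code), whereas LLMs are the subset of foundational models focused on language.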

Global leaders such as OpenAI, Anthropic, and Google DeepMind have been pioneers in the development of these massive models, driving innovation and laying the foundation for enterprise-level applications.

While Foundational Models and Large Language Models have been grabbing the attention of investors and the larger AI community, we also want to draw attention to other nuances in this space.

Regional LLMs: Regional LLMs from players like Sarvam.ai, Ola’s Krutrim, and Project Indus, with models ranging from 2 to 50 billion parameters, focus on solving regional use cases. These models can be fine-tuned for specific industries or regional languages, providing a better performance-to-cost ratio than massive models like LLaMA. Smaller LLMs can also reduce the risk of hallucinations (i.e., generating incorrect or irrelevant content) when they are trained on region- and domain-specific data, leading to higher accuracy and more reliable outputs.

Tuning Open-Source LLMs: An exciting and growing opportunity lies in tuning existing open-source models for domain-specific needs. Open-source frameworks like Hugging Face’s Transformers and Meta’s LLaMA have made it easier for startups to fine-tune pre-existing models for specific tasks without needing to build models from scratch. This approach is increasingly attractive from an investment standpoint, as it allows companies to quickly adapt models for sectors like agriculture, education, and logistics.

A notable example is Bhasha Daan in India, which focuses on localizing LLMs for Indian languages and specific industry domains, showcasing how open-source models can be tailored to address local market needs.

When it comes to tasks like coding, generalized LLMs typically achieve around 80% accuracy. However, localized models—those fine-tuned for specific languages or regional needs—can hit up to 85% accuracy.

One of the key challenges we see with Foundational and Regional Models is the substantial ongoing investment required for maintenance: continuous model updates, fine-tuning, and significant capital requirements make them high-risk ventures. Additionally, effective usage of these models demands a deep understanding of complex prompting, requiring users to actively manage their problem-solving approach.

AI is no longer a one-size-fits-all tool; it is fast becoming deeply sector-specific. Across industries, companies are building AI solutions tailored to the unique needs of each vertical, creating competitive moats and tangible business value. Vertical AI excels where general-purpose tools fall short. Designed to automate entire processes, these solutions bring precision and efficiency to tasks that have long relied on human effort.

These Vertical AI solutions range from fully automated agents that handle tasks end-to-end to assistive copilots that augment human decision-making (often both, depending on the use-case). Companies are developing AI assistants tuned to the unique workflows and jargon of fields like healthcare, finance, and enterprise services.

The two primary categories of AI systems—AI Copilots and AI Agents—are paving the way for these advancements.

AI Copilots vs. AI Agents

The Vertical AI market, valued at $5.1 billion in 2024, is projected to soar to $47.1 billion by 2030 and could potentially exceed $100 billion by 2032.[2]
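To put the projection above in annual terms, the following is a minimal sketch that derives the compound annual growth rate implied by the two quoted endpoints. The function name `implied_cagr` is our own illustration; only the $5.1 billion (2024) and $47.1 billion (2030) figures come from the cited source.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value,
    and the number of years between them."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of US dollars.
vertical_ai_2024 = 5.1
vertical_ai_2030 = 47.1

cagr = implied_cagr(vertical_ai_2024, vertical_ai_2030, years=2030 - 2024)
print(f"Implied CAGR 2024-2030: {cagr:.1%}")
```

On these figures the implied growth rate works out to roughly 45% a year, which gives a sense of how aggressive the projection is.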

Some of the sectors in which we see AI applications are listed below.

Market Landscape: AI in Industrial Applications

As Generative AI scales, infrastructure has become a critical bottleneck—and opportunity. AI models are growing more powerful, but their compute demands are surging faster than traditional hardware can keep up. Since 2012, the compute required to train top models has doubled roughly every 3.4 months—far outpacing Moore’s Law.[3] Training a model like GPT-4 is estimated to cost over $100 million, pushing startups to look for smarter, not bigger, solutions.[4]
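The gap between a 3.4-month doubling time and Moore’s Law is easier to appreciate as a yearly multiple. The sketch below converts a doubling time into an annual growth factor; the helper name is hypothetical, and we assume the common reading of Moore’s Law as a doubling roughly every 24 months.

```python
def annual_growth_factor(doubling_time_months: float) -> float:
    """How many times a quantity multiplies in 12 months,
    given the number of months it takes to double."""
    if doubling_time_months <= 0:
        raise ValueError("doubling_time_months must be positive")
    return 2 ** (12 / doubling_time_months)


# 3.4 months is the figure quoted in the text.
ai_training_compute = annual_growth_factor(3.4)
# Assumption: Moore's Law taken as a doubling every 24 months.
moores_law = annual_growth_factor(24)

print(f"AI training compute: ~{ai_training_compute:.1f}x per year")
print(f"Moore's Law:         ~{moores_law:.1f}x per year")
```

Under these assumptions, training compute grows by more than an order of magnitude each year, while hardware following Moore’s Law improves by well under 2x over the same period.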

This demand for compute has positioned hardware and optimization technologies as key areas of investment. AI infrastructure layers, including chips, data centers, and software optimization frameworks, are critical to powering AI applications, making these areas attractive for investors who are looking to capture value from the growing AI ecosystem.

Optimization Potential for Lower Compute Usage:

While the computational demands of AI models are ever-increasing, there is a significant opportunity for optimization. Optimization techniques can help reduce the compute footprint without compromising performance, offering an attractive path forward for AI companies.

We're also seeing next-gen architectures emerge which aim to deliver high performance at lower computational cost. DeepSeek’s R1 and OpenAI’s o3-mini are already setting benchmarks in efficient reasoning with lower latency and smaller footprints.

We believe that as Generative AI continues to evolve, the need for more efficient architectures will drive innovation towards alternative model designs that reduce computational demands. Researchers and companies are already exploring alternatives, such as state space models, sparse models, mixture-of-experts architectures, and neuromorphic computing, which could one day replace the need for transformers in certain applications.

At Inflexor, we’re excited about the growing potential of verticalized AI solutions in India. While the country’s AI ecosystem is expanding, we believe the next major leap lies in the deep customization of AI models to address specific business challenges. Startups must, and will, go beyond wrapping generic models and focus on creating fine-tuned, task-specific models that solve unique industry problems.

India's diverse economy offers numerous opportunities for verticalized AI across various sectors, as highlighted earlier. From consumer tech and healthcare to fintech and enterprise software, Indian startups are leveraging the power of specialized AI solutions to address unique industry challenges.

The Path Forward:

For Indian startups, the keys to success in verticalized AI lie in the following:

Successful startups in this space will need to invest heavily in their engineering and research capabilities, assembling interdisciplinary teams with expertise in machine learning, domain-specific knowledge, and data engineering. These teams must be relentlessly focused on iterating on their models, continuously optimising their model architectures, loss functions, and training techniques to eke out those final percentage points of accuracy.

While open-source and foundational models can provide a strong starting point, enabling startups to reach 80% accuracy on various tasks, we believe that the key to customer success lies in the pursuit of that final 20% of performance improvement.

Startups that are laser-focused on optimizing their models, backed by quality data science teams and data-driven metrics, will be the ones that outperform their competitors. Even incremental gains from 80% accuracy can lead to exponentially better results, unlocking new avenues for value creation and market capture.
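One way to see why a few points of accuracy can matter disproportionately is to consider a workflow made of several dependent steps. The sketch below is purely illustrative: it assumes each step succeeds independently with the same accuracy, which real systems will not satisfy exactly, and the five-step workflow is a hypothetical example of ours.

```python
def workflow_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step of a multi-step workflow succeeds,
    assuming independent steps with identical accuracy (a simplification)."""
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return step_accuracy ** steps


# Hypothetical five-step workflow at different per-step accuracies.
for accuracy in (0.80, 0.85, 0.95):
    rate = workflow_success_rate(accuracy, steps=5)
    print(f"per-step accuracy {accuracy:.0%} -> end-to-end success {rate:.1%}")
```

Because the per-step accuracy is raised to the power of the number of steps, small per-step gains compound: under these assumptions, moving from 80% to 95% per step more than doubles the end-to-end success rate of a five-step workflow.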

We believe that startups that can master this delicate balance of technical innovation, data-driven optimization, and domain-specific expertise will be the ones that emerge as leaders in their respective verticals. These are the companies that we are most eager to support – those with the vision, the drive, and the talent to push the boundaries of what's possible with generative AI and unlock the true potential of the last mile.

In the rapidly evolving world of AI, the relationship between data quality and model performance is more important than ever. High-quality data drives better AI models, which in turn generate more valuable data, creating a self-sustaining loop that propels both AI capabilities and business growth. Having access to unique datasets, particularly those not available to the general public, provides an immense advantage. It allows for the creation of specialized models that outperform general-purpose ones in specific tasks.

We believe that proprietary data, coupled with the Model Context Protocol (MCP), an open standard introduced by Anthropic, represents a game-changing opportunity for startups. MCP goes beyond traditional fine-tuning by providing a flexible framework for connecting models to external data sources and tools, so that real-time, context-specific information can be injected at inference time.

By leveraging proprietary datasets, startups can create hyper-specialized AI solutions that offer unparalleled accuracy and relevance within specific domains.

The ability to dynamically inject organizational context into AI models during inference is a key differentiator. This approach transforms AI from a generic tool into a true extension of organizational intelligence. We're particularly excited about startups developing secure infrastructures and protocols that enable seamless, real-time data integration while respecting strict governance requirements.
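The general pattern of injecting governed organizational context at inference time can be sketched in a few lines. This is not the MCP API or any vendor's implementation; `ContextRecord`, `build_prompt`, and the role-based filter are hypothetical names and choices of ours, meant only to show context being filtered by access rights before it reaches a model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ContextRecord:
    """A piece of proprietary data plus the roles allowed to see it (hypothetical)."""
    text: str
    allowed_roles: frozenset[str]


def build_prompt(question: str, records: list[ContextRecord],
                 user_role: str, max_records: int = 3) -> str:
    """Assemble a prompt that carries only the context the user may access."""
    # Governance step: drop anything the requesting role is not cleared for.
    permitted = [r.text for r in records if user_role in r.allowed_roles]
    permitted = permitted[:max_records]
    context_block = "\n".join(f"- {text}" for text in permitted)
    if not context_block:
        context_block = "- (no context available)"
    return f"Context:\n{context_block}\n\nQuestion: {question}"


records = [
    ContextRecord("Q3 churn was 4%", frozenset({"analyst", "executive"})),
    ContextRecord("Payroll export location", frozenset({"hr"})),
]
print(build_prompt("What was churn last quarter?", records, user_role="analyst"))
```

The resulting string would then be sent to whichever model the organization uses. Filtering before the prompt is assembled, rather than asking the model to withhold information, is one simple way to respect governance requirements.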

As Generative AI becomes increasingly accessible, affordable and hence highly replicable, we believe the core AI models are likely to become commoditized, with the real differentiation emerging in how these models are implemented, distributed, and presented to end-users. In this rapidly evolving ecosystem, we believe that intuitive workflows, effective distribution strategies and exceptional user experiences will become the crucial moats for successful AI startups.

An intuitive, engaging user interface can be the deciding factor in widespread adoption and user retention. As Generative AI capabilities become more ubiquitous, we expect the winners in this space to be those who excel in creating user-centric experiences, efficient distribution channels, and seamless integrations into existing workflows. Startups that focus on these aspects, treating the AI model as a powerful tool rather than the end product itself, will be best positioned to create lasting value and achieve market dominance in a competitive AI landscape.

In recent times, there has been a raging debate among Indian technology giants over whether India should focus on building LLMs.

We at Inflexor believe that while well-funded large companies will continue to build general-purpose Large Language Models, it may not always be cost-efficient for companies to use these LLMs for every use case. Small language models (SLMs) have the potential to accelerate AI adoption in more organizations and for more use cases.

SLMs excel in real-time decision-making, even in remote areas with limited connectivity, thanks to their ability to operate offline and at the edge. Designed for specialized applications and heightened security needs, these models are trained on domain-specific datasets and have significantly fewer parameters than large language models (LLMs). Because they do not depend on cloud-based calls, they are particularly valuable in highly regulated industries where privacy is paramount.

We believe that, from the near term through the long term, organisations will adopt a “mix and match” approach: LLMs will continue to serve general-purpose use cases and complex queries, while smaller models will handle simpler tasks and specific use cases tailored to the organisation’s needs. This shift will be driven by the organisation’s need to optimise costs and resources.
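A “mix and match” setup implies some routing logic that decides which model handles each request. The sketch below uses a deliberately naive heuristic (query length and a few keywords) to illustrate the idea. The function name, the threshold, and the keyword list are all assumptions of ours; a production router would more likely rely on a trained classifier or on cost and latency budgets.

```python
def choose_model(query: str, word_limit: int = 30) -> str:
    """Return "slm" for short, simple queries and "llm" for long or
    reasoning-heavy ones, using a naive keyword heuristic (illustrative only)."""
    # Hypothetical markers of queries that need multi-step reasoning.
    complex_markers = {"why", "compare", "explain", "analyse", "analyze"}
    words = [word.strip("?,.!").lower() for word in query.split()]
    is_long = len(words) > word_limit
    needs_reasoning = any(word in complex_markers for word in words)
    return "llm" if is_long or needs_reasoning else "slm"


print(choose_model("Reset my password"))
print(choose_model("Compare these two vendor contracts and explain the risks"))
```

Keeping the routing decision in one small function makes it easy to tune the split between models as costs and capabilities change.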

While the spotlight today is on Generative AI (GenAI), we also want to draw attention to traditional AI, comprising technologies like Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and classical machine learning models, which has reached a high level of maturity. These models have been battle-tested across industries, delivering efficiency, accuracy, and scalability in well-defined tasks.

Unlike GenAI, which excels in generating novel content, traditional AI thrives in structured problem-solving, where precision and deterministic outcomes are critical. Robotics relies on computer vision powered by CNNs for object detection and navigation, cybersecurity employs anomaly detection algorithms to flag potential threats, and network optimization leverages predictive models to enhance efficiency. These are just a few examples of how traditional AI continues to drive innovation in practical, high-stakes domains.

Moreover, Generative AI is not always the best or the most cost-effective solution. Many industrial and enterprise applications still benefit more from established AI techniques due to their lower computational demands and explainability. Manufacturing processes use AI-driven defect detection systems that operate with high accuracy without the need for generative capabilities. Financial institutions depend on machine learning models for fraud detection and risk assessment, areas where interpretability is essential.

While we are bullish on the transformative potential of GenAI, the foundational role of traditional AI remains indispensable, and we strongly believe that the two will coexist, complementing each other in a rapidly evolving AI landscape.

At Inflexor, we believe that the next wave of AI disruption will be defined not just by foundational models, but by how effectively they are adapted to solve real-world problems. The democratization of AI tooling and open-source models has lowered the barrier to entry—but the winners will be startups that combine deep domain knowledge, quality proprietary data, and world-class teams to push beyond the generic 80% threshold into truly differentiated solutions.

[1] https://www.forbes.com/councils/forbestechcouncil/2025/01/10/ai-amplifying-human-ingenuity/

**All Graphs used below are made with the help of Data from Tracxn

[2] https://aimresearch.co/ai-startups/vertical-ai-agents-will-dominate-2025#:~:text=The Vertical AI market%2C valued,the economic potential is enormous.

[3] https://openai.com/index/ai-and-compute/

[4] Sam Altman - https://www.wired.com/story/openai-ceo-sam-altman-the-age-of-giant-ai-models-is-already-over/